All products featured are independently chosen by us. However, SoundGuys may receive a commission on orders placed through its retail links. See our ethics statement.

How do hearing aids work?

March 7, 2023

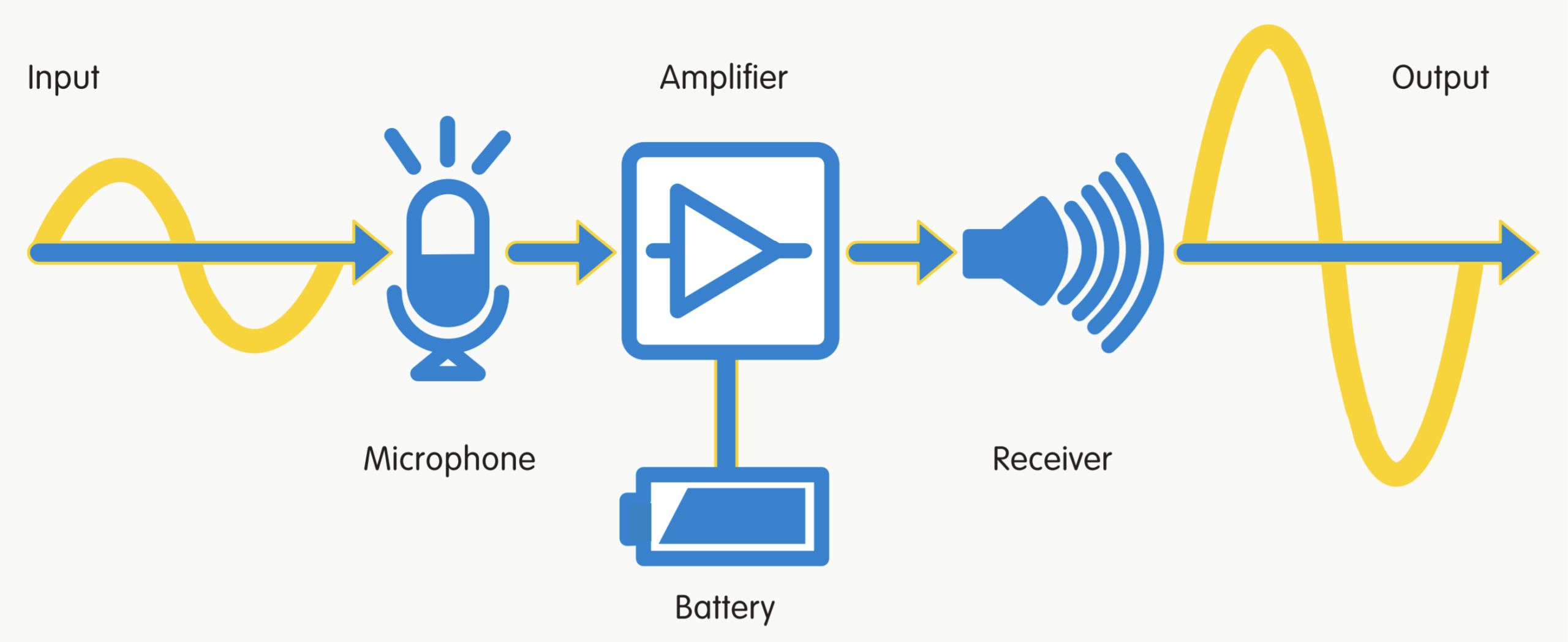

At its core, every hearing aid works the same way: a microphone captures sound, an amplifier makes the sound louder, and a receiver (similar to a speaker) outputs the amplified sound into the wearer’s ear.

This seems simple enough, yet you can choose from a number of different hearing aids and features. What exactly happens inside a hearing aid, why does it never sound as good as natural hearing, and why should you use a hearing aid in the first place?

To answer these questions, we have to back up a little.

Editor’s note: this article was updated on March 7, 2023, to address frequently asked questions.

How does human hearing work?

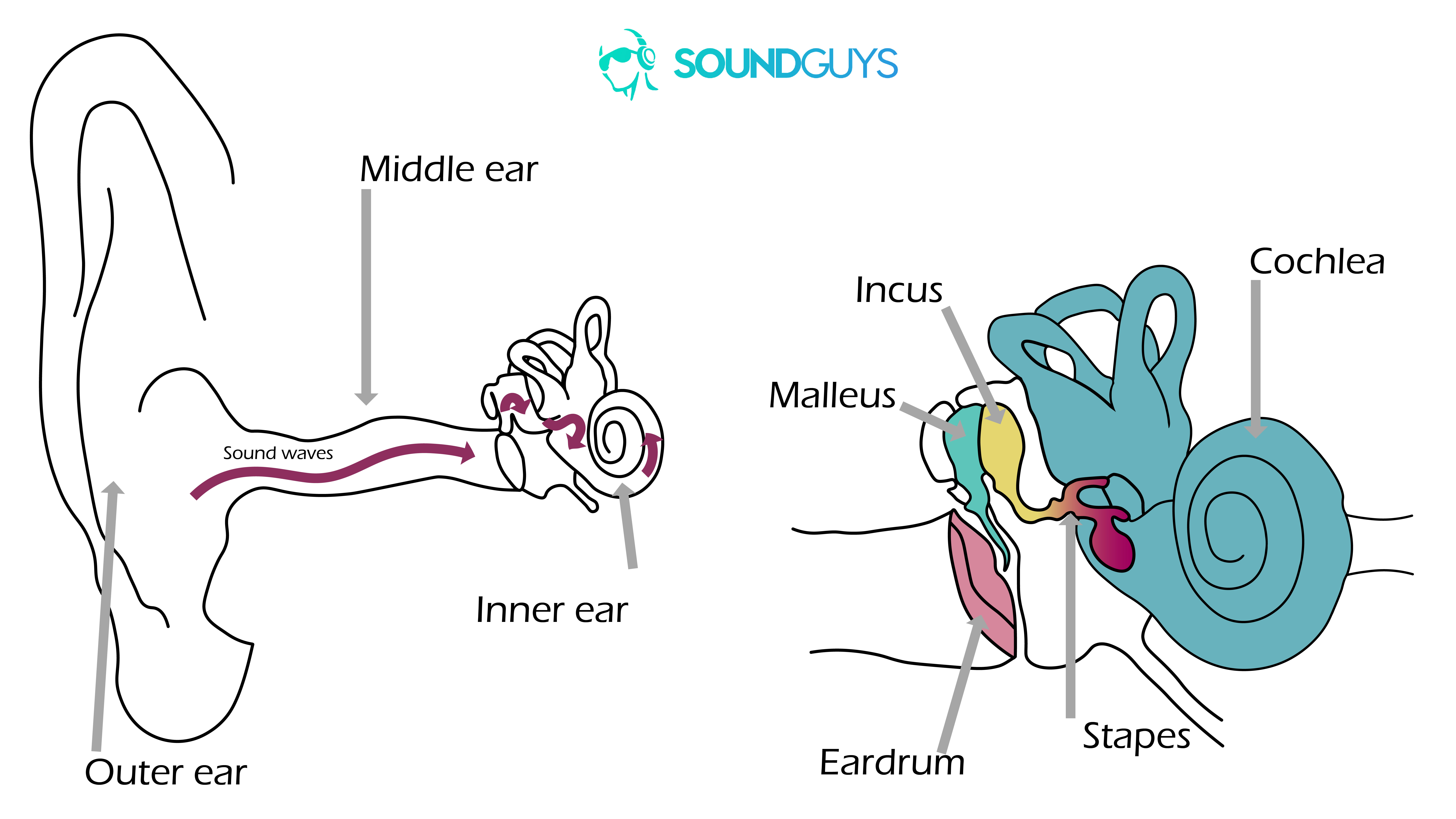

The visible outer part of your ear, the pinna, captures sound and funnels it into the ear canal and on to the middle ear. From there, sound reaches the inner ear, travels along the auditory nerve, and finally arrives at the auditory cortex, the region of the brain where we perceive sound. The sound is processed at every step along the way.

The outer ear

To experience how the pinna works, try holding your right hand behind your right ear. By expanding the physical reach of your ear, you should notice that some sounds seem a bit louder.

However, the pinna isn’t just a natural amplifier. Its ridges and folds also reflect and absorb sounds, giving the sound waves unique spectral characteristics. As the sound travels through the ear canal, it’s subject to further processing.

The middle ear

Like the pinna, the ear canal attenuates some frequencies while emphasizing others. The only things that can pass through a healthy eardrum (tympanic membrane) are sound waves. On the inner side, the membrane connects to a series of small bones: the ossicles.

The interaction of the eardrum with the ossicles transforms the sound waves into mechanical vibrations. That’s necessary because otherwise the airborne sound waves would simply bounce off the denser fluid in the cochlea and reflect back into the ear canal. By matching the impedance (resistance to sound transmission) of the air to that of the fluid, the middle ear lets sound pass into the cochlea.
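The impedance argument can be made concrete with a quick calculation. The sketch below is a simplified physics model, not a model of the real ear: it assumes the cochlear fluid behaves like water, uses approximate textbook values for density and speed of sound, and computes how much sound energy would cross a flat air-to-fluid boundary without the middle ear’s help.

```python
import math

def characteristic_impedance(density_kg_m3: float, speed_m_s: float) -> float:
    """Characteristic acoustic impedance of a medium, in Pa·s/m (rayl)."""
    return density_kg_m3 * speed_m_s

def transmitted_fraction(z1: float, z2: float) -> float:
    """Fraction of sound intensity crossing a flat boundary at normal incidence."""
    return 4 * z1 * z2 / (z1 + z2) ** 2

# Approximate values; treating cochlear fluid as water is an assumption.
z_air = characteristic_impedance(1.2, 343.0)
z_fluid = characteristic_impedance(1000.0, 1480.0)

fraction = transmitted_fraction(z_air, z_fluid)
loss_db = 10 * math.log10(fraction)

if __name__ == "__main__":
    print(f"Transmitted: {fraction:.2%} of the energy ({loss_db:.1f} dB)")
```

With these values, only about 0.1% of the energy gets through, a loss of roughly 30dB. That is the gap the eardrum and ossicles help bridge.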

The inner ear

In the cochlea, hair cells convert the mechanical energy of sound into electrochemical nerve impulses, a process known as transduction. The outer hair cells act as amplifiers, which helps with the perception of soft sounds, while the inner hair cells work as transducers. When they are stimulated strongly enough, the inner hair cells release neurotransmitters that activate the auditory nerve, which in turn relays the signal to the auditory cortex in the brain.

Where does hearing loss happen?

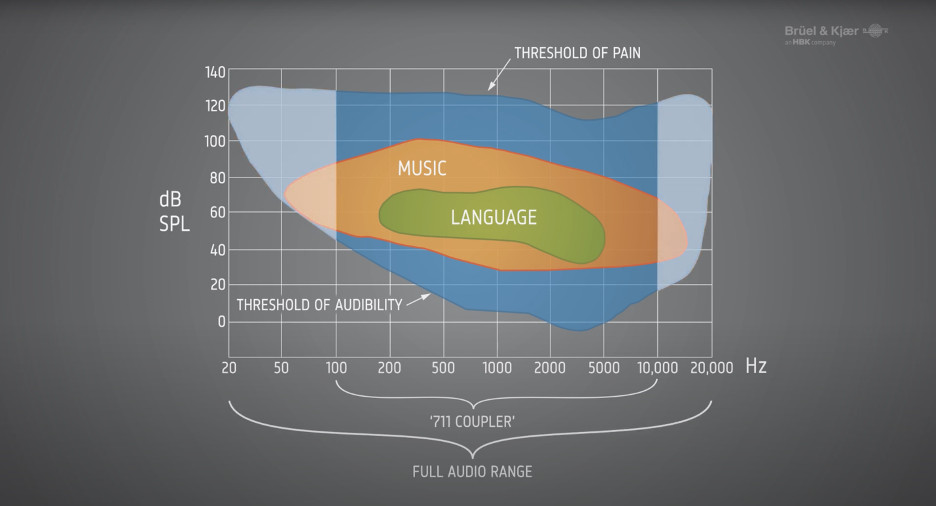

In young people, healthy hearing spans a frequency range from 20-20,000Hz. As we age, we progressively lose the ability to hear high-frequency sounds.

Age-related and noise-induced hearing loss typically result from damage to the hair cells; this is also known as sensorineural hearing loss. When hair cells stop functioning, we lose sensitivity to certain frequencies, meaning some sounds seem much quieter or become inaudible. As long as sounds remain audible, sensorineural hearing loss responds well to amplification.

Hearing loss can also originate from damage to other parts of the ear, in which case hearing aids might be of limited use. For example, when the eardrum or ossicles can’t transmit sound, amplification alone might not be able to bypass the damaged middle ear to reach the cochlea.

How do digital hearing aids process sound?

Hearing aids do more than amplify sounds. To create a custom sound profile that improves speech recognition, they process sounds in multiple ways.

Microphones

Like the human ear, digital hearing aids don’t process sound waves directly. First in line are the microphones. They act as transducers, capturing mechanical wave energy and converting it into electrical energy.

Many modern hearing aids come with two microphones:

- The omnidirectional microphone picks up sounds from any direction.

- The directional microphone targets sounds coming from the wearer’s front; its main focus is capturing speech.

The directional microphone can be fixed or adaptive. An adaptive directional microphone can turn on or off as needed. When turned on, it automatically switches between different directional microphone algorithms, depending on the listening environment.
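The article doesn’t say how directionality is achieved. One widely used approach, shown here as an assumption rather than what any particular hearing aid does, combines two closely spaced omnidirectional microphones into a delay-and-subtract (first-order differential) array: the rear microphone’s signal is delayed by the time sound needs to travel between the two ports and then subtracted from the front signal, which cancels sound arriving from behind. The 12mm port spacing is a hypothetical value.

```python
import cmath
import math

SPEED_OF_SOUND_M_S = 343.0  # in air at about 20 °C

def differential_gain(angle_deg: float, freq_hz: float, spacing_m: float = 0.012) -> float:
    """Magnitude response of a two-microphone delay-and-subtract array.

    angle_deg = 0 means sound arrives from the front, 180 from behind.
    spacing_m is a hypothetical distance between the two microphone ports.
    """
    travel_time = spacing_m / SPEED_OF_SOUND_M_S
    # How much later the sound reaches the rear port (negative if it arrives first)
    acoustic_delay = travel_time * math.cos(math.radians(angle_deg))
    # An internal delay equal to the travel time produces a cardioid pattern
    internal_delay = travel_time
    omega = 2 * math.pi * freq_hz
    # Front signal minus the delayed rear signal, in the frequency domain
    response = 1 - cmath.exp(-1j * omega * (acoustic_delay + internal_delay))
    return abs(response)

if __name__ == "__main__":
    for angle in (0, 90, 180):
        print(f"{angle:>3} degrees: gain {differential_gain(angle, 1000):.3f}")
```

Sound from the front passes, sound from the side is attenuated, and sound from directly behind cancels out. The subtraction also weakens low frequencies, which real designs compensate for with equalization.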

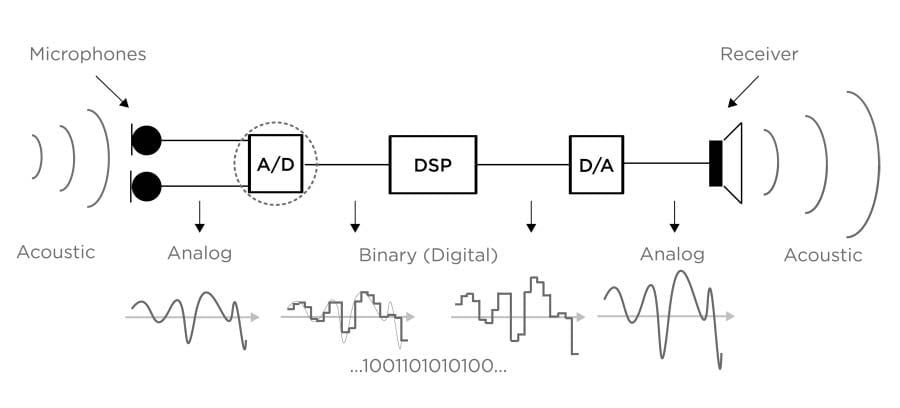

Analog to Digital Conversion

The analog signals coming from the microphones are converted into a digital signal (A/D conversion). This binary signal is then subject to digital signal processing (DSP). Finally, the processed digital signal is converted back into an analog signal (D/A conversion), which the receiver turns into sound that enters the ear canal.

Much can go wrong during these conversions. The Hearing Review notes:

This [conversion] process, if not carefully designed, can introduce noise and distortion, which will ultimately compromise the performance of the hearing aids.
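The A/D, DSP, and D/A chain described above can be sketched in a few lines. This toy model assumes a signed 16-bit converter, voltages normalized to ±1.0, and a single fixed gain standing in for the entire DSP stage. It shows where the two problems in the quote come from: rounding to the nearest step adds a small error (quantization noise), and anything pushed past full scale is clipped (distortion).

```python
import math

BITS = 16
FULL_SCALE = 2 ** (BITS - 1) - 1  # 32767, the largest positive 16-bit sample

def clamp(value, low, high):
    return max(low, min(high, value))

def analog_to_digital(voltage: float) -> int:
    """Quantize a voltage normalized to -1.0..1.0 into a signed 16-bit integer."""
    return round(clamp(voltage, -1.0, 1.0) * FULL_SCALE)  # louder inputs clip

def apply_gain(sample: int, gain_db: float) -> int:
    """Stand-in for the DSP stage: amplify, then clip to the 16-bit range."""
    amplified = round(sample * 10 ** (gain_db / 20))
    return clamp(amplified, -FULL_SCALE - 1, FULL_SCALE)

def digital_to_analog(sample: int) -> float:
    """Convert a 16-bit sample back into a normalized voltage for the receiver."""
    return sample / FULL_SCALE

if __name__ == "__main__":
    # A quiet sine wave sampled 16 times per period stands in for the microphone
    quiet_tone = [0.01 * math.sin(2 * math.pi * n / 16) for n in range(16)]
    digital = [analog_to_digital(v) for v in quiet_tone]
    output = [digital_to_analog(apply_gain(s, 20)) for s in digital]
    print(max(output))
```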

A key issue of the A/D conversion is its limited dynamic range. Average human hearing spans a dynamic range of 140dB, helping us hear anything from rustling leaves (0dB) to fireworks (140dB). The still-common 16-bit A/D converter is limited to a dynamic range of 96dB, the same as a CD; that window could, for example, span 36-132dB. Raising the lower limit eliminates soft sounds, such as whispering at 30dB, while lowering the upper limit yields poorer sound quality in loud environments.
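The 96dB figure follows from the bit depth: each extra bit doubles the number of amplitude steps, adding about 6dB, so an N-bit converter spans roughly 20·log10(2^N) dB. The sketch below assumes the 36dB floor from the example window above and checks which sounds survive the conversion: the article’s whisper and fireworks, plus normal conversation at about 60dB. Real hearing aids set this window through microphone sensitivity and analog gain, which the sketch ignores.

```python
import math

def dynamic_range_db(bits: int) -> float:
    """Ratio between full scale and one quantization step, in dB."""
    return 20 * math.log10(2 ** bits)

def conversion_outcome(level_db: float, floor_db: float = 36.0, bits: int = 16) -> str:
    """Classify a sound level against the converter window (floor_db is an assumption)."""
    ceiling_db = floor_db + dynamic_range_db(bits)
    if level_db < floor_db:
        return "lost below the floor"
    if level_db > ceiling_db:
        return "clipped"
    return "captured"

if __name__ == "__main__":
    print(f"16-bit range: {dynamic_range_db(16):.1f} dB")
    for name, level in [("whisper", 30), ("conversation", 60), ("fireworks", 140)]:
        print(f"{name} ({level} dB): {conversion_outcome(level)}")
```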

Sounds that don’t make it through the converter can’t be amplified.

Amplification and dynamic range compression

Amplification is a key function of hearing aids, and it requires a delicate balance.

Hearing loss generally narrows an individual’s dynamic range, the span between the softest audible sound and the loudest comfortable one, often to as little as 50dB. Sounds must be compressed into this narrower range to remain audible yet comfortable.

While linear amplification would make soft sounds louder, ideally audible, it would also make loud sounds uncomfortable or even painful. Hence, most hearing aids use wide dynamic range compression (WDRC). This compression method strongly amplifies soft sounds, only applies a moderate amount of amplification to medium sounds, and barely makes loud sounds any louder.
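A static input/output rule is enough to show the idea behind WDRC. This sketch assumes hypothetical fitting values: a 45dB kneepoint, 30dB of gain for soft sounds, and a 2:1 compression ratio. Real devices also use attack and release times and an output limiter, which are left out here.

```python
def wdrc_output_db(input_db: float, kneepoint_db: float = 45.0,
                   gain_db: float = 30.0, ratio: float = 2.0) -> float:
    """Static wide dynamic range compression curve (hypothetical parameters)."""
    if input_db <= kneepoint_db:
        # Below the kneepoint: plain linear amplification
        return input_db + gain_db
    # Above the kneepoint: every `ratio` dB of input adds only 1 dB of output
    compressed = kneepoint_db + gain_db + (input_db - kneepoint_db) / ratio
    # Design choice for this sketch: never make a sound quieter than it was
    return max(compressed, input_db)

if __name__ == "__main__":
    for level in (30, 60, 90):
        out = wdrc_output_db(level)
        print(f"in {level} dB -> out {out} dB (gain {out - level} dB)")
```

With these values, a soft 30dB sound gets 30dB of gain, a medium 60dB sound gets 22.5dB, and a loud 90dB sound only 7.5dB. A linear 30dB gain would instead push that 90dB sound to 120dB.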

Channels and bands

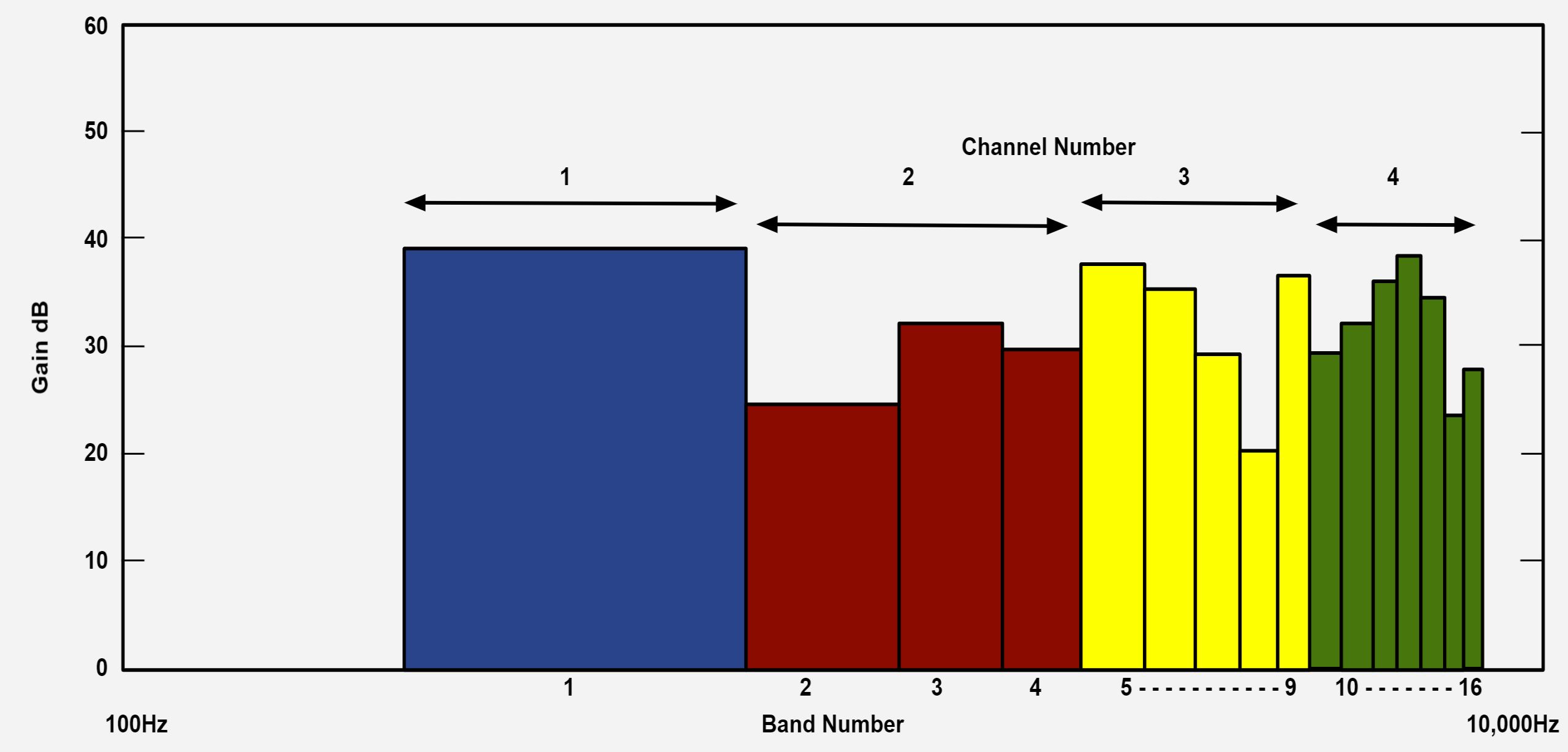

Modern hearing aids process sounds based on the wearer’s hearing loss. To do that, they split the incoming sound into a number of frequency channels, anywhere from three to more than 40. Each channel covers a different frequency range and is analyzed and processed separately.

While channels determine processing, bands control the volume or gain at different frequencies. Most modern hearing aids have at least a dozen frequency bands they can amplify. This is similar to headphone or speaker equalizers, where you can manually raise the level of a specific frequency range, for example, to boost the bass.
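How a hearing care provider turns an audiogram into band gains depends on the prescription formula. Purely as an illustration, the sketch below uses the historical half-gain rule (gain equals half the hearing loss at each frequency); modern fittings use more elaborate formulas such as NAL-NL2 or DSL v5. The audiogram values are hypothetical.

```python
# Hypothetical audiogram: hearing threshold in dB HL per test frequency (Hz)
audiogram = {250: 20, 500: 25, 1000: 35, 2000: 50, 4000: 60, 8000: 70}

def half_gain_targets(thresholds: dict[int, float]) -> dict[int, float]:
    """Half-gain rule: prescribe gain equal to half the hearing loss per band."""
    return {freq: loss / 2 for freq, loss in thresholds.items()}

if __name__ == "__main__":
    for freq, gain in half_gain_targets(audiogram).items():
        print(f"{freq:>5} Hz: +{gain:.1f} dB")
```

The result works like an equalizer preset: the high frequencies, where this listener’s loss is greatest, get the largest boost.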

The upside of having more channels and bands is finer tuning. The hearing aid can better separate speech from background noise, cancel out feedback, reduce noise, and match the volume and compression at different frequencies to the wearer’s specific needs.

The downside of having additional channels is longer processing times. This can be a problem for people who can still hear environmental sounds without a hearing aid: they hear the direct sound first and the processed sound from the hearing aid a moment later. Studies have shown that a processing delay of three to six milliseconds can be perceived as degraded sound quality.
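The trade-off between frequency detail and delay can be sketched with a simple FFT-based filter bank. This sketch assumes, as a simplification, that the processing delay is roughly one analysis frame, and it uses a hypothetical 16kHz sample rate. Doubling the frame length halves the spacing between frequency bins, which allows finer channels, but it also doubles the delay.

```python
SAMPLE_RATE_HZ = 16_000  # hypothetical hearing aid sample rate

def frame_delay_ms(frame_size: int, sample_rate_hz: int = SAMPLE_RATE_HZ) -> float:
    """Time needed to collect one analysis frame, in milliseconds."""
    return 1000 * frame_size / sample_rate_hz

def bin_spacing_hz(frame_size: int, sample_rate_hz: int = SAMPLE_RATE_HZ) -> float:
    """Frequency resolution of an FFT over one frame."""
    return sample_rate_hz / frame_size

if __name__ == "__main__":
    for size in (32, 64, 128):
        print(f"{size:>3}-sample frame: {bin_spacing_hz(size):.0f} Hz bins, "
              f"{frame_delay_ms(size):.1f} ms delay")
```

Under these assumptions, a 64-sample frame already takes 4ms, inside the three-to-six-millisecond range flagged above. Real designs reduce this with overlapping frames and low-delay filter banks.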

So, what kind of processing happens at the channel level?

Custom programs and artificial intelligence for different environments

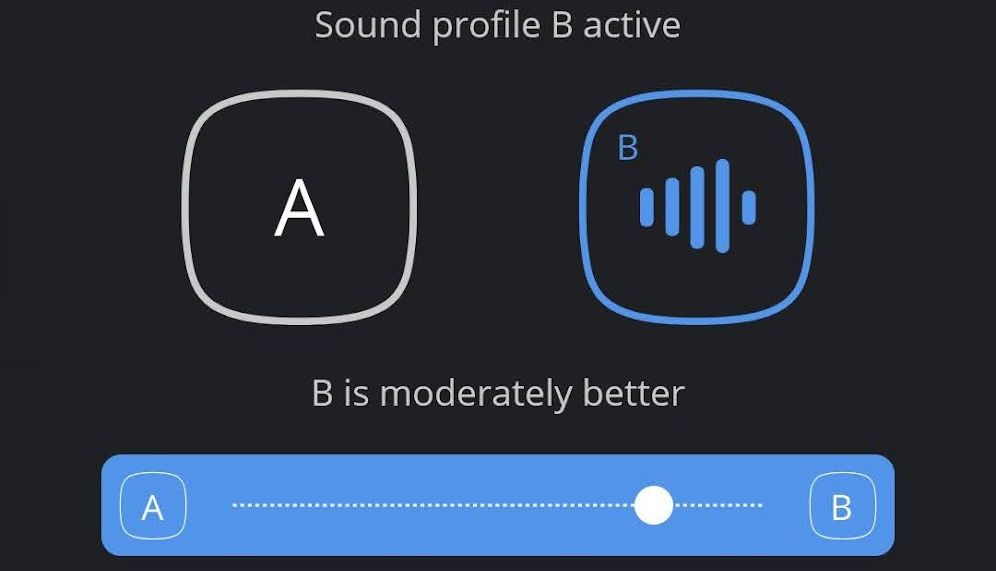

No single hearing aid setting is perfect for all occasions. When you’re at home listening to quiet music, you’ll need different processing than when you’re out with friends in a loud environment. To address this, most hearing aids come with different programs that let you switch from a default program to music-friendly processing or to a more aggressive elimination of background noise.
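Automatic program switching can be pictured as a classifier that maps a measured sound scene to one of the stored programs. The rule-based sketch below is purely hypothetical: the detector flags, thresholds, and program names are invented for illustration, and commercial hearing aids use their own, often proprietary, classifiers.

```python
from dataclasses import dataclass

@dataclass
class SoundScene:
    level_db: float   # overall sound level at the microphones
    speech: bool      # hypothetical speech-detector flag
    music: bool       # hypothetical music-detector flag

def choose_program(scene: SoundScene) -> str:
    """Pick a stored program for the current scene (hypothetical rules)."""
    if scene.music and not scene.speech:
        return "music"             # gentle processing, little noise reduction
    if scene.speech and scene.level_db >= 70:
        return "speech in noise"   # aggressive background noise reduction
    if scene.level_db >= 75:
        return "comfort in noise"  # loud scene without speech
    return "default"

if __name__ == "__main__":
    print(choose_program(SoundScene(level_db=78, speech=True, music=False)))
```

Machine-learning approaches replace hand-written thresholds like these with a trained model.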

While your hearing care provider can customize these programs to your hearing, they can only cover a limited number of scenarios. And since you’ll be in a quiet environment for the hearing aid fitting, they have to guesstimate your preferences for different sound scenes. That’s where artificial intelligence (AI) comes in.

Hearing aids with machine learning can learn about different environments, tap into data from other users, predict the settings you’ll find most pleasant, and automatically adapt their processing.

Can frequency shifting and lowering improve speech recognition?

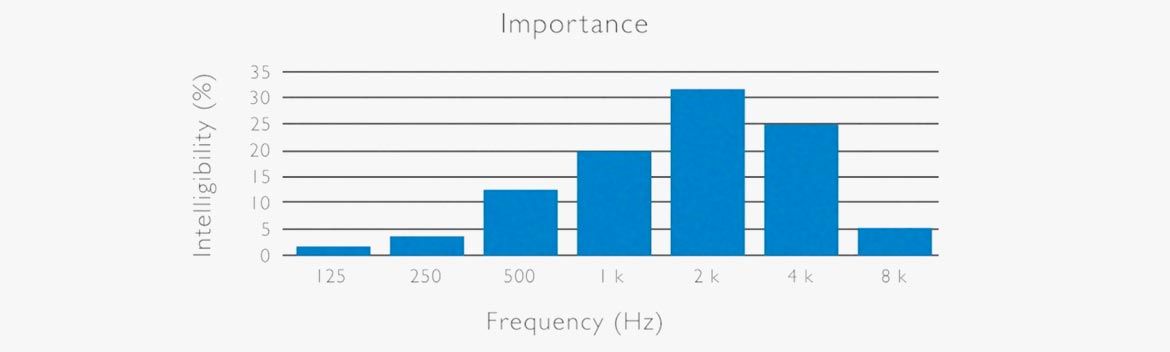

We’ve already established that hearing aids can’t restore normal hearing because they can’t fix what’s broken. Their main task is to restore speech recognition by working with the remaining hearing ability. But what happens when hearing loss affects the frequencies that cover speech?

In non-tonal (Western) languages, the frequencies key to speech intelligibility range from 125-8,000Hz. That’s the exact bandwidth covered by standard hearing tests and hearing aids. Remember, human hearing can reach up to 20,000Hz, typically 17,000Hz in a middle-aged adult.

When hearing loss is so severe that it affects frequencies below 8,000Hz, hearing aids can shift those higher-frequency sounds into a lower frequency band. Unfortunately, transposing sounds in this manner creates artifacts.
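One form of frequency lowering, nonlinear frequency compression, leaves frequencies below a cutoff untouched and squeezes everything above it into a narrower range; the transposition mentioned above instead moves a band down. The sketch applies the compression on a logarithmic frequency scale, with a hypothetical 2,000Hz cutoff and 2:1 ratio.

```python
def lower_frequency(freq_hz: float, cutoff_hz: float = 2000.0, ratio: float = 2.0) -> float:
    """Nonlinear frequency compression on a log scale (hypothetical parameters)."""
    if freq_hz <= cutoff_hz:
        return freq_hz  # frequencies below the cutoff stay as they are
    # The number of octaves above the cutoff is divided by the ratio
    return cutoff_hz * (freq_hz / cutoff_hz) ** (1 / ratio)

if __name__ == "__main__":
    for f in (1000, 4000, 8000):
        print(f"{f} Hz -> {lower_frequency(f):.0f} Hz")
```

With these settings, an 8,000Hz sound is reproduced at 4,000Hz: two octaves above the cutoff become one. Because the frequency relationships within a sound change, the result can sound unnatural.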

The Hearing Review explains:

Some reported that the transposed sounds are “unnatural,” “hollow or echoic,” and “more difficult to understand.” Another commonly reported artifact is the perception of “clicks” which many listeners find annoying.

Even recent studies of newer algorithms don’t show significant benefits of frequency lowering. However, as technology advances, this may be a feature to watch for in the future.

How do various types of hearing aids differ?

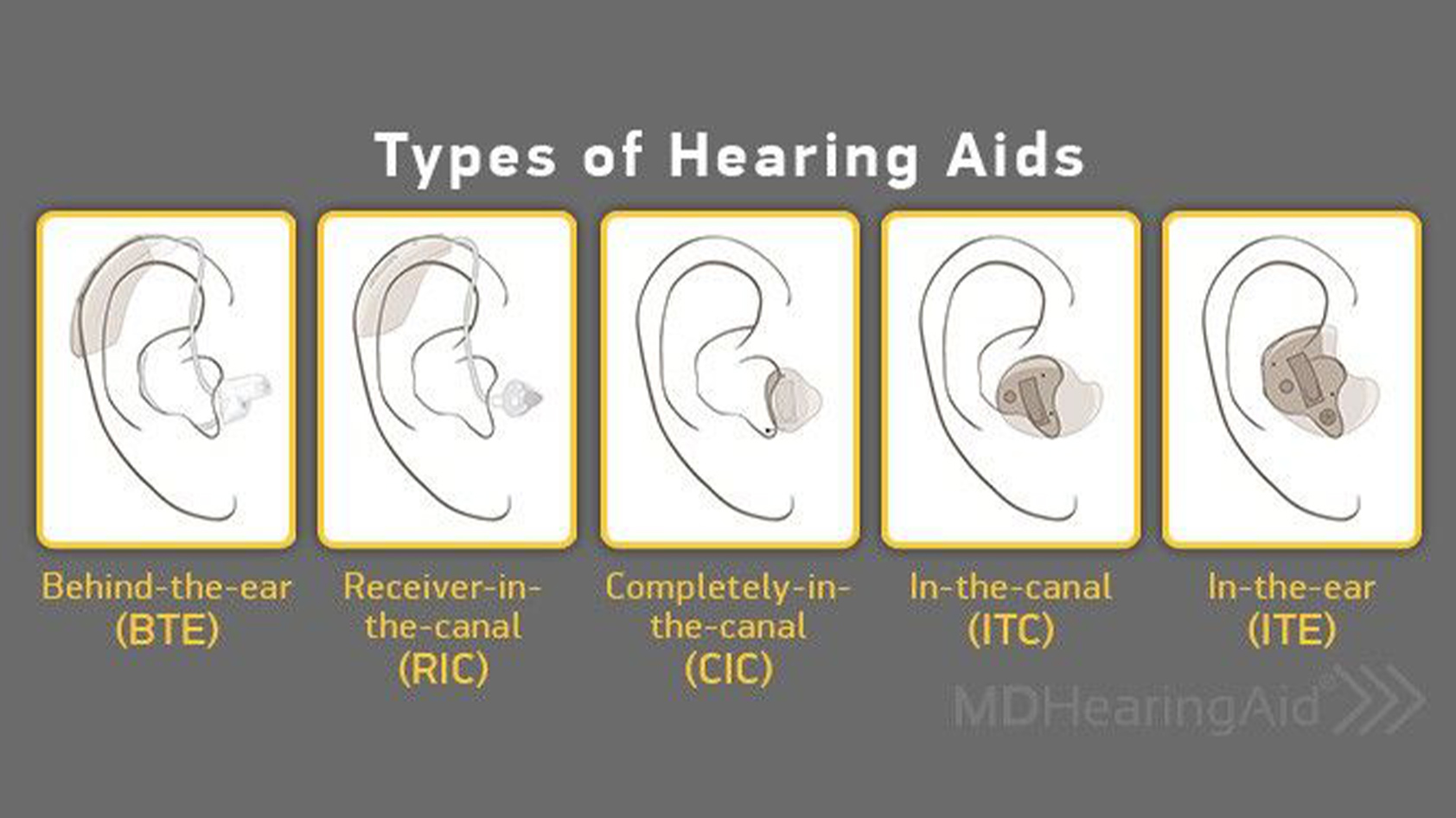

Not all hearing aids feature the same technology. Different types of hearing aids exist because they address different types of hearing loss. More severe hearing loss requires more processing.

The smallest and least visible units focus on amplification and simple processing. Larger units, like behind-the-ear (BTE) or receiver-in-the-canal (RIC) hearing aids, can include an additional microphone, a second processor, and a larger battery, and can apply more complex algorithms.

What are the benefits of hearing aids?

On a physiological level, hearing aids do much more than restore speech recognition.

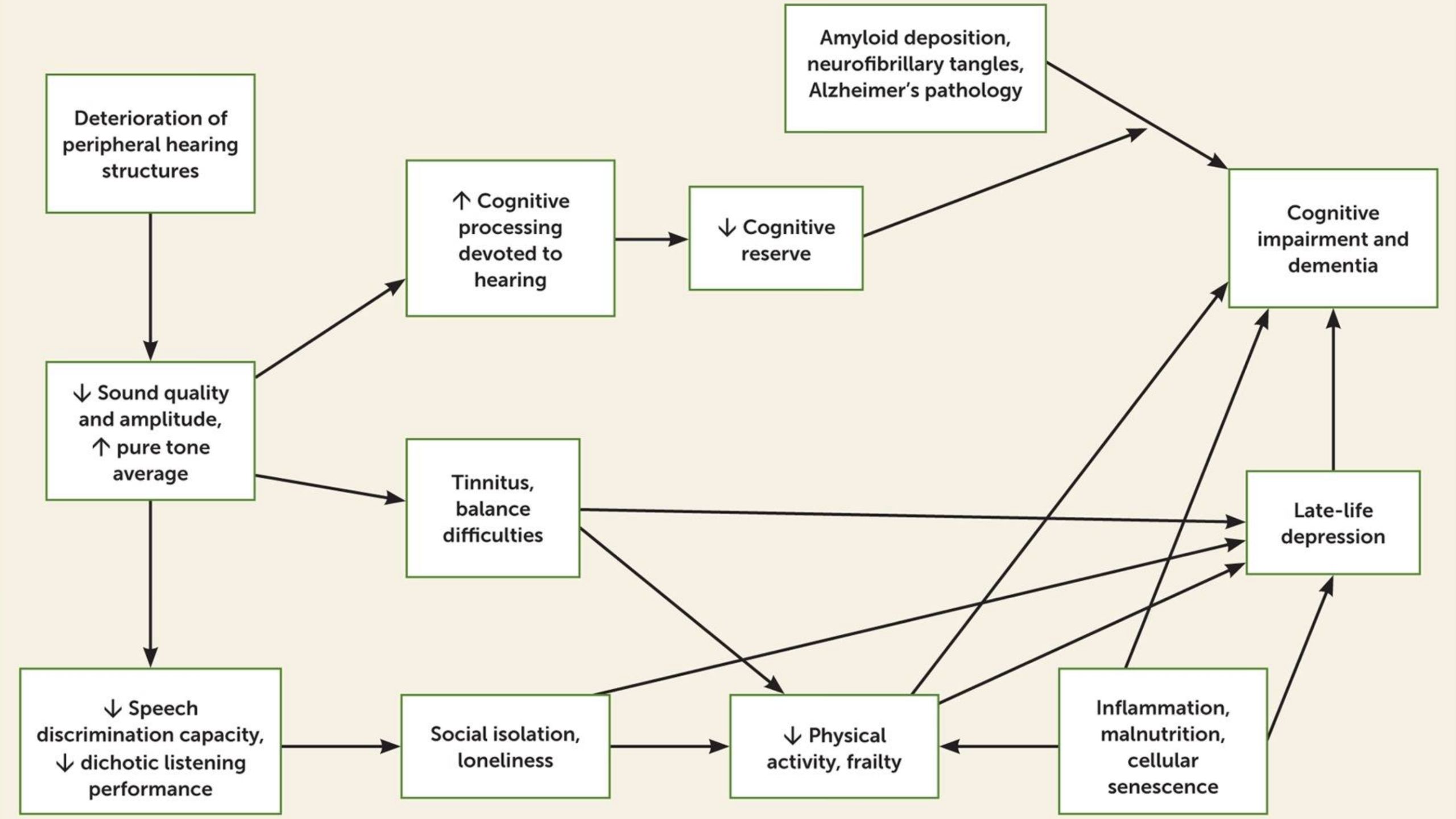

Multiple studies link age-related hearing loss to dementia, Alzheimer’s disease, depression, and other forms of cognitive decline. One proposed reason is social isolation: people with hearing loss avoid socializing. Why do they retreat? Even with mild to moderate hearing loss, the brain has to work harder to process speech, which can be exhausting and frustrating. Assuming the brain has limited resources, this increase in auditory processing may also impair memory. The cognitive load theory is compelling and well-studied, but it’s not the only possible explanation.

Other potential reasons for the correlation of cognitive decline and hearing loss are much simpler. Hearing loss and mental decline could have a common cause, such as inflammation. The lack of sensory input could also lead to structural changes in the brain (cascade hypothesis). Finally, over-diagnosis (harbinger hypothesis) may be an issue, though recent studies clearly confirm the cognitive load theory and cascade hypothesis and suggest that a combination of causes isn’t uncommon.

That’s where hearing aids come in. By addressing hearing loss, they can slow down cognitive decline, reduce the risk of late-life depression, and thus improve quality of life, regardless of the cause. While hearing aids are a key component, a holistic treatment should aim to address all underlying causes.

One thing to keep in mind is that hearing loss research overwhelmingly focuses on adults aged 60+. However, it doesn’t seem far-fetched to expect beneficial outcomes for younger people, too. At the very least, the life of a middle-aged adult with a full-time job, a family, and a circle of friends is too demanding to be held back by poor hearing.

Do you need a hearing aid?

If you’re unsure whether you could benefit from a hearing aid, get a hearing test or take a free online hearing screener. This will reveal your level of hearing loss and whether it’s time to get a hearing aid. Should you need one, we recommend seeing an audiologist for an audiogram and a custom fitting of your hearing aid.

Keep in mind that hearing aids can only address hearing loss in the frequency range below 8,000Hz. They’re best at amplifying the volume within that range, but only as long as you can actually hear those frequencies.

Frequently asked questions about hearing aids

Do hearing aids support Bluetooth?

Yes, most modern hearing aids support Bluetooth, and some also have a telecoil. A telecoil (aka t-coil) lets your hearing aid receive wireless audio transmissions from assistive listening systems (ALS), which you’ll find in many public venues. With a Bluetooth-enabled hearing aid, you should be able to stream audio from your Android 10+ smartphone or iPhone; look for ASHA and MFi protocol support, respectively. Soon, Bluetooth 5.2 LE will replace telecoil in public spaces, but you’ll need both a phone and hearing aids that support the new protocol.

What are OTC hearing aids?

According to the NIH, “OTC hearing aids are a new category of hearing aids that consumers will soon be able to buy directly, without visiting a hearing health professional.” These hearing devices present a more affordable alternative to standard hearing aids, which typically come bundled with professional services, such as regular examinations and maintenance. The FDA is currently working to pass regulations to permit the sale of OTC hearing aids. OTC hearing aids are suitable for people with mild-to-moderate hearing loss and will feature options for users to self-fit the device, i.e., adjust the settings to compensate for their unique hearing loss profile.

What does a hearing aid fitting involve?

Traditionally, a hearing aid fitting consists of an ear exam, a hearing test, the physical fitting of a suitable hearing aid, and the programmatic fitting of that device based on your hearing test results, followed by real-ear measurements to ensure the fitting was successful. Once OTC hearing aids are available, you’ll follow an FDA-approved protocol to self-fit the device. For optimal results, you might still want to get an ear exam and a standard hearing test prior to purchasing an OTC hearing aid.

Can hearing aids help with tinnitus?

Yes, wearing a hearing aid can quiet your tinnitus. Many hearing aids feature tinnitus maskers that play white noise to block out the tinnitus. While hearing aids can bring relief, they’re unlikely to cure tinnitus. Moreover, tinnitus can have many organic causes, such as blood vessel conditions, temporomandibular joint (jaw) disorders, or abnormal inner ear fluid pressure (Meniere’s disease), which is why you should consult a medical doctor before seeking self-treatment.

Do hearing aids use Wi-Fi?

Hearing aids generally don’t use Wi-Fi. Many modern hearing aids, however, support Bluetooth for connecting to a mobile app or streaming audio.

Can hearing aids replace lost frequencies?

Hearing aids don’t replace sounds or frequencies, but they can make frequencies of up to 8,000Hz louder. This doesn’t fix tinnitus, as it doesn’t restore the damaged hair cells in the cochlea. The loss of the hair cells means that your ear (and thus your brain) physically can’t hear the affected frequencies. This physical damage usually affects frequencies well above 8,000Hz.

What frequencies can humans hear?

A young human ear can hear up to 20,000Hz. When we age, we gradually lose the highest frequencies first and by our 40s have lost hearing for frequencies above 15,000Hz. Losing the ability to hear these frequencies, especially when it happens due to trauma, such as noise-induced hearing loss caused by headphones, can lead to tinnitus.