
Apple's AirPods Pro 3 Live Translation has a sharing problem

September 16, 2025

Editor’s Note: This article was updated after publication to reflect that Live Translation will be available on multiple AirPods models (AirPods Pro 2, AirPods Pro 3, and AirPods 4 with ANC), not exclusively on AirPods Pro 3 as initially reported.

Apple’s newly announced AirPods Pro 3 bring Live Translation to the forefront, promising to break down language barriers with hands-free, real-time translation powered by Apple Intelligence. The feature supports English, French, German, Portuguese, and Spanish at launch, with Italian, Japanese, Korean, and Chinese coming later this year. However, before you count on this feature to navigate foreign countries, know that there’s an awkward snag that can come up in practice, and we’ve seen it before.

Apple’s press release reveals the core limitation: “It’s even more useful for longer conversations when both users are wearing their own AirPods with Live Translation enabled from their iPhone.” This isn’t just a suggestion; in practice, it’s a requirement for true two-way conversation. Like Samsung’s Galaxy AI Interpreter, which we covered last year, Apple’s Live Translation is inherently designed around individual ownership rather than shared communication.

Translation for me, text for thee.

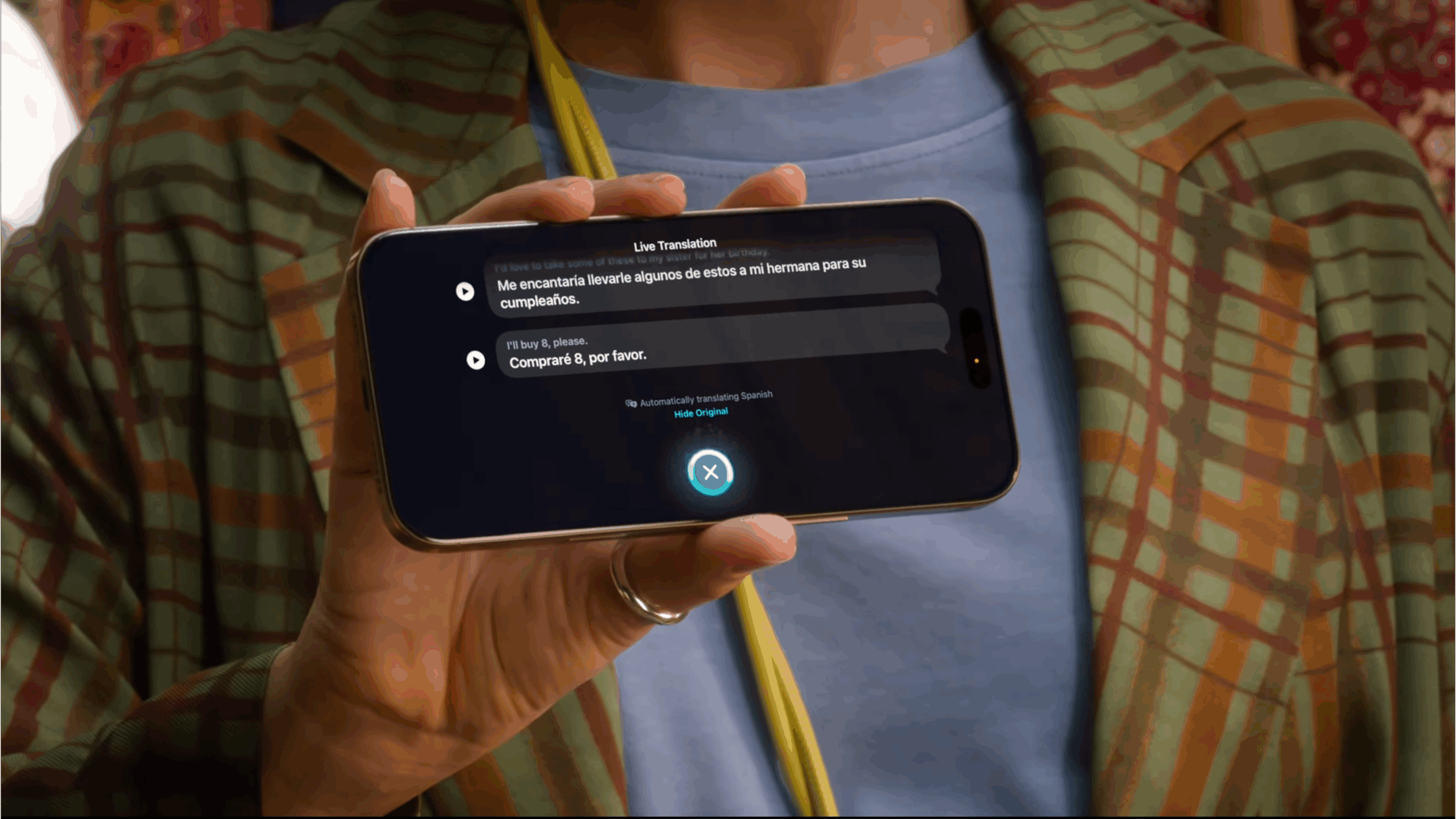

When only one person has compatible AirPods, the feature becomes decidedly one-sided. Apple acknowledges this by offering an iPhone display mode: “there’s an option to use iPhone as a horizontal display, showing the live transcription of what the user is saying in the other person’s preferred language.” The other person then speaks back, and their response is translated into the AirPods wearer’s preferred language.

This creates the same uncomfortable dynamic we identified with Samsung’s Galaxy Buds3 Pro: an audio privilege where only the AirPods owner gets a seamless listening experience while their conversation partner is relegated to reading text on a screen or listening through the phone’s speaker. Anyone who has traveled extensively can guess how hard it is to make out a robotic voice from a phone speaker in a busy market or transit station.

Apple’s answer is straightforward: both people need their own AirPods. Thankfully, the feature isn’t limited to AirPods Pro 3, as we initially reported. Live Translation will also work on AirPods Pro 2 and AirPods 4 with Active Noise Cancellation, which covers essentially any AirPods with the H2 chip and ANC.


This approach makes business sense for Apple, which gets to sell two pairs of earbuds instead of one, but it creates barriers to adoption in real-world scenarios. Not everyone with whom you might want to have a translated conversation will conveniently own compatible Apple hardware.

I get that sharing earbuds with a stranger can be gross, but consider some practical scenarios where it would be acceptable: learning a language with a friend, sharing earbuds with a partner to explore a museum, or collaborating with an international colleague. In each case, the feature’s usefulness diminishes significantly when only one person can access the audio translation.

When we covered the Galaxy Buds3 Pro, 58% of our poll respondents agreed that sharing translated audio between two people using one pair of earbuds is an essential feature, so it’s unfortunate to see the same limitation in Apple’s implementation. I think translation features should facilitate a natural conversational flow, not create technological divides between participants.


Both Apple and Samsung have positioned their translation features as ecosystem exclusives tied to specific hardware combinations. Apple’s Live Translation requires compatible AirPods and an Apple Intelligence-enabled iPhone running iOS 26 or later, while Samsung’s Interpreter works with new Galaxy Buds and Galaxy phones. The result is a fragmented landscape where seamless translated conversations are possible only within matching technology ecosystems.

The irony is stark: features designed to break down language barriers are simultaneously creating technological barriers. True conversation requires mutual understanding, but these implementations privilege the device owner while leaving their conversation partner with a diminished experience.

As it stands, Live Translation will be best suited to solo listening in specific scenarios, such as understanding announcements, following lectures, or keeping up with tour guides. Apple’s AirPods Pro 3 will excel at helping you understand the world around you. Whether they’ll help you truly communicate with it remains another question entirely.
